The rapid development of Artificial Intelligence (AI) keeps producing solutions that change both daily life and the business world. One such development is SmolLM2, a family of compact language models from Hugging Face. The models deliver strong performance for their size and raise the bar for local AI processing. In this post, we highlight the key characteristics of SmolLM2, its practical applications, and the potential benefits for medium-sized companies.

What is SmolLM2?

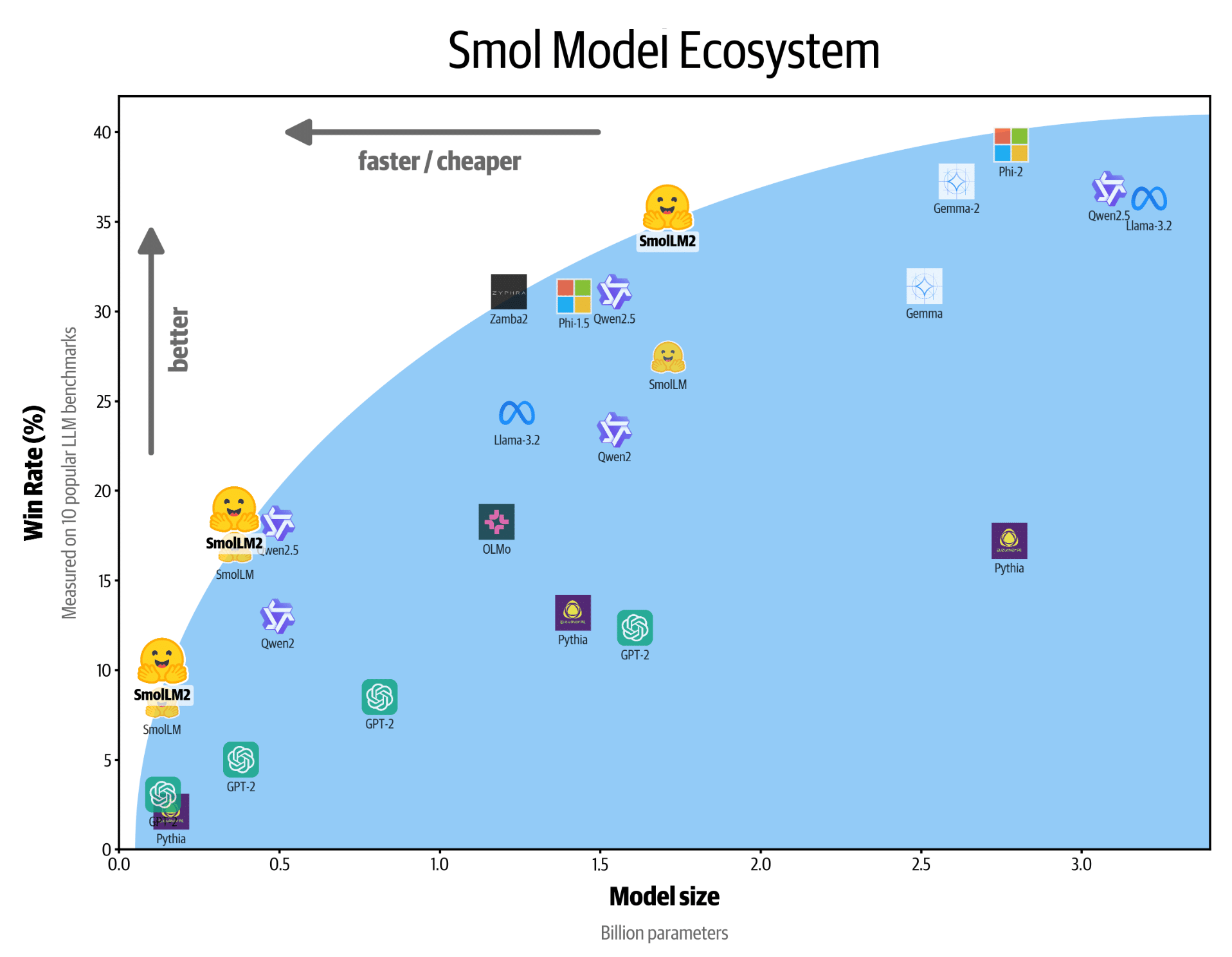

SmolLM2 is a series of small language models developed by Hugging Face. These models combine strong performance with low resource usage, making them well suited for use on local devices. Large models like GPT-4 are widely assumed to have hundreds of billions of parameters or more (OpenAI has not published an official figure), whereas the largest SmolLM2 model has only 1.7 billion. Despite its small size, SmolLM2-1.7B outperforms comparably sized models from Meta, such as Llama 3.2 1B, in some benchmarks, underscoring its efficiency.

Key Features of SmolLM2

1. Compact Size and High Efficiency

SmolLM2 is available in three sizes: 135 million, 360 million, and 1.7 billion parameters. This compact design enables use on devices with limited resources such as smartphones, laptops, and IoT devices, without sacrificing performance.
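To make the size difference concrete, the following minimal sketch estimates how much memory the weights alone occupy for each model size. The parameter counts come from the text above; the bytes-per-parameter values are the usual storage sizes of each numeric format, and the helper name `weight_memory_gb` is made up for this illustration. Activations, the KV cache, and runtime overhead are not included, so real memory usage will be higher.

```python
# Parameter counts as stated in the text above.
PARAM_COUNTS = {
    "SmolLM2-135M": 135_000_000,
    "SmolLM2-360M": 360_000_000,
    "SmolLM2-1.7B": 1_700_000_000,
}

# Storage size per parameter for common numeric formats (int4 is half a byte).
BYTES_PER_PARAM = {"float32": 4.0, "float16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, num_params in PARAM_COUNTS.items():
        row = ", ".join(
            f"{dtype}: {weight_memory_gb(num_params, dtype):.2f} GB"
            for dtype in BYTES_PER_PARAM
        )
        print(f"{name}: {row}")
```

By this estimate, the 1.7B model needs roughly 3.4 GB for its weights in 16-bit precision, while the 135M model stays well below 1 GB in any of the listed formats.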

2. Superior Performance

The largest model in the series, SmolLM2-1.7B, outperforms comparably sized models from Meta in several benchmark tests. It does particularly well in scientific reasoning and commonsense reasoning.

3. Local Processing Without Cloud Dependency

One of the biggest advantages of SmolLM2 is the ability to run AI applications directly on the end device. This eliminates dependency on cloud services, which not only reduces latency but can also offer significant data protection benefits.
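As a rough illustration of local processing, the sketch below runs a prompt through a SmolLM2 instruct checkpoint with the Hugging Face `transformers` library. It assumes `transformers` and PyTorch are installed and that the checkpoint names match those published on the Hugging Face Hub. The helper names `build_messages` and `generate_locally` are invented for this example. The weights are downloaded once on first use and then read from the local cache.

```python
# Instruct checkpoints on the Hugging Face Hub (names assumed from the Hub).
CHECKPOINTS = {
    "135M": "HuggingFaceTB/SmolLM2-135M-Instruct",
    "360M": "HuggingFaceTB/SmolLM2-360M-Instruct",
    "1.7B": "HuggingFaceTB/SmolLM2-1.7B-Instruct",
}


def build_messages(user_prompt: str) -> list[dict]:
    """Wrap a prompt in the chat-message format used by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_locally(user_prompt: str, size: str = "360M", max_new_tokens: int = 64) -> str:
    """Generate a reply on the local machine (CPU by default)."""
    # Imported lazily so this module loads even where transformers is absent.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    checkpoint = CHECKPOINTS[size]
    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    model = AutoModelForCausalLM.from_pretrained(checkpoint)

    # Render the chat template to text, then tokenize it.
    prompt_text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt_text, return_tensors="pt", add_special_tokens=False)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Keep only the newly generated tokens, not the echoed prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_locally("Summarize the benefits of on-device AI in one sentence.")` sends no prompt data to a remote inference service; only the initial model download requires a network connection.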

4. Open Licensing

Hugging Face provides SmolLM2 under the Apache 2.0 license, a permissive open-source license that allows commercial use and modification. This promotes the democratization of AI development, as companies and developers can freely access the models and adapt them to their needs.

Practical Applications of SmolLM2

1. AI on Edge: Intelligent IoT Devices

The ability to run capable AI models locally opens new possibilities for the Internet of Things (IoT). SmolLM2 can be deployed directly on sufficiently capable IoT devices to support real-time analysis and decision-making. Examples include:

- Smart Home Systems: Intelligent assistants that recognize voice commands and process them directly on the device without sending data to the cloud.

- Industry 4.0: Machines in production lines can perform local error analysis and make autonomous decisions, increasing efficiency and reliability.

2. Mobile Applications: Powerful AI on Your Smartphone

Modern smartphones have powerful GPUs that enable the execution of complex AI models. With SmolLM2, applications can run directly on the device, offering numerous benefits:

- Data Protection: Sensitive data doesn’t need to be sent over the internet, increasing user security and privacy.

- Offline Functionality: Applications remain functional even without an internet connection, which is particularly advantageous in areas with poor network coverage.

- Faster Response Times: Since processing occurs locally, response times are significantly shorter, improving user experience.

3. Enterprise Solutions: Efficient and Secure AI in Business Operations

For medium-sized companies that need reliable and cost-effective AI solutions, SmolLM2 offers numerous advantages:

- Cost Savings: Local processing can reduce or eliminate recurring costs for cloud services and the associated infrastructure.

- Data Security: Companies can securely process sensitive business data on their own devices without the risk of data leaks in the cloud.

- Scalability: SmolLM2 lets companies scale AI applications across existing devices without large investments in specialized hardware or cloud resources.

Benefits of Using SmolLM2 for Your Company

1. Democratization of AI Development

SmolLM2 lowers the barriers to entry for companies wanting to use AI. With compact models, even smaller companies and independent developers can implement powerful AI applications without relying on expensive infrastructure.

2. Reduced Environmental Impact

Smaller models like SmolLM2 require less computing power and energy, contributing to a reduction in the ecological footprint of AI applications. This is not only cost-effective but also supports sustainability goals.

3. Flexibility and Adaptability

SmolLM2 models can be adapted to specific use cases, for example through fine-tuning. Whether for natural language processing, text summarization, or function calling, SmolLM2 offers the flexibility to meet various requirements.
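To illustrate what function calling means on the application side, here is a minimal sketch of a dispatcher that takes a tool call emitted by a model as JSON and runs the matching Python function. The JSON shape (`{"name": ..., "arguments": {...}}`), the two example tools, and the helper `dispatch_tool_call` are assumptions made for this illustration, not SmolLM2's documented output format.

```python
import json
from typing import Any, Callable


# Hypothetical tools for illustration; a real application registers its own.
def get_order_status(order_id: str) -> str:
    return f"Order {order_id} is being processed"


def add(a: float, b: float) -> float:
    return a + b


TOOLS: dict[str, Callable[..., Any]] = {
    "get_order_status": get_order_status,
    "add": add,
}


def dispatch_tool_call(model_output: str) -> Any:
    """Parse a JSON tool call and invoke the registered function.

    Assumed format: {"name": "<tool name>", "arguments": {...}}
    """
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc

    if not isinstance(call, dict):
        raise ValueError("tool call must be a JSON object")

    name = call.get("name")
    if not isinstance(name, str) or name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")

    arguments = call.get("arguments", {})
    if not isinstance(arguments, dict):
        raise ValueError("arguments must be a JSON object")

    # Only functions listed in TOOLS can ever be executed.
    return TOOLS[name](**arguments)
```

Restricting execution to an explicit registry is a deliberate choice here: small models can produce malformed or unexpected output, so the application validates the call before acting on it.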

Challenges and Outlook

Although SmolLM2 shows impressive progress, challenges remain. The models currently focus primarily on English and may be less capable in other languages. In addition, like other language models, they do not always deliver factually correct or logically consistent results.

Nevertheless, the introduction of SmolLM2 marks an important step toward more efficient and accessible AI. The ability to run powerful models locally could significantly influence the future of practical AI and enable broader use across various industries.

Conclusion: Is SmolLM2 the Future of Practical AI?

SmolLM2 from Hugging Face is a significant development in the field of AI. The combination of strong performance, compact size, and local processing makes these models an attractive option for medium-sized companies. By lowering the barriers to AI development and reducing costs and environmental impact, SmolLM2 points toward practical and accessible AI applications. Nevertheless, there will be use cases that need a central cloud solution and a lot of GPU power. There is no “one-size-fits-all” solution in AI. In the end, each problem must be analyzed, and an AI engineer must develop the right solution for it. If you’re thinking about how AI can advance your company, SmolLM2 could be the key to an efficient and secure implementation, particularly if you don’t want to process any data in the cloud or need very low latency.