Artificial Intelligence (AI) has become indispensable in many business processes. Whether in the automotive industry, healthcare, or security monitoring, AI systems optimize workflows, improve efficiency, and enable innovative solutions. However, the more companies rely on AI, the greater the threat to these systems becomes. One particularly striking danger comes from Adversarial Attacks: targeted manipulations designed to deceive AI models and impair their functionality. In this post, we examine what Adversarial Attacks are, how they work, and what measures companies can take to protect themselves.

What are Adversarial Attacks?

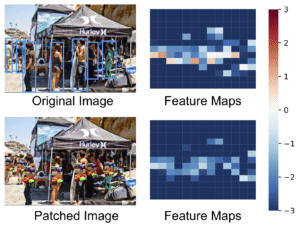

Adversarial Attacks are targeted attacks on AI systems where small, often barely perceptible changes are made to input data. These changes aim to confuse AI models and produce incorrect results. A common example is found in image classification: A small pattern applied to an image can cause the AI system to misidentify an object or fail to recognize it entirely.

These attacks are particularly dangerous because they often involve minimal changes that are barely detectable to the human eye. Nevertheless, they can have significant impacts on the performance and reliability of AI systems.
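To make the idea concrete, here is a minimal sketch of a gradient-sign perturbation (in the spirit of the Fast Gradient Sign Method) against a toy linear classifier. The weights, the input values, and the function names are made up for illustration; this is not the attack used in the study discussed below, and real detectors are far more complex.

```python
# Toy linear classifier: a score above 0 means "person", otherwise "background".
# WEIGHTS, BIAS and the sample input are hypothetical values for illustration only.
WEIGHTS = [0.9, -0.4, 0.3, -0.8]
BIAS = -0.05


def score(x):
    """Weighted sum of the input features plus bias."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def classify(x):
    return "person" if score(x) > 0 else "background"


def sign(v):
    return (v > 0) - (v < 0)


def gradient_sign_perturb(x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score.

    For a linear model, the gradient of the score with respect to the input
    is simply WEIGHTS, so only its sign is needed.
    """
    return [xi - epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


x = [0.5, 0.4, 0.5, 0.4]
x_adv = gradient_sign_perturb(x, epsilon=0.1)

print(classify(x))      # person
print(classify(x_adv))  # background
```

No feature moves by more than 0.1, yet the prediction flips. That is the core mechanism: many small changes, each aligned with the model's sensitivity, add up to a large change in the output.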

The Study: Adversarial Attacks on Modern Object Detection Systems

An insightful study on this topic is “Making an Invisibility Cloak: Real World Adversarial Attacks on Object Detectors.” This research illuminates the vulnerability of modern object detection systems to Adversarial Attacks and shows how these attacks work in practice and what consequences they can have for companies.

Study Objectives

The researchers had the following main goals:

- Transferability of Attacks: Investigating how well Adversarial Attacks transfer across different models, classes, and datasets.

- Real-World Applications: Development and testing of physical attacks, for example through printed patterns on clothing or posters.

- Effectiveness Assessment: Quantifying the success rates of these attacks under different conditions and against various detection systems.

- Vulnerability Identification: Identifying which factors influence the effectiveness of attacks.

Methodology

The researchers used YOLOv2, a well-known object detection model, to train specific patterns that prevent or complicate the detection of objects like people. These patterns were tested both digitally and physically to assess the robustness and transferability of the attacks. Various environmental factors such as lighting, camera angle, and distance were considered to create realistic test conditions.
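A common way to account for such environmental factors is to evaluate a candidate pattern under many random placements and lighting conditions and average the detector's response. The sketch below illustrates only that evaluation step. The `toy_detector_score` function is a hypothetical stand-in (mean pixel intensity), not YOLOv2, and the exact procedure used in the study may differ.

```python
import random


def apply_patch(image, patch, top, left, brightness):
    """Return a copy of image with patch pasted at (top, left).

    Patch values are scaled by brightness and clipped to the range [0, 1].
    """
    out = [row[:] for row in image]
    for r, patch_row in enumerate(patch):
        for c, value in enumerate(patch_row):
            out[top + r][left + c] = min(1.0, max(0.0, value * brightness))
    return out


def toy_detector_score(image):
    """Hypothetical stand-in for a detector's confidence: mean pixel intensity."""
    pixels = [p for row in image for p in row]
    return sum(pixels) / len(pixels)


def expected_score(image, patch, n_samples, rng):
    """Average the detector score over random patch positions and lighting."""
    height, width = len(image), len(image[0])
    p_h, p_w = len(patch), len(patch[0])
    total = 0.0
    for _ in range(n_samples):
        top = rng.randint(0, height - p_h)      # random vertical position
        left = rng.randint(0, width - p_w)      # random horizontal position
        brightness = rng.uniform(0.7, 1.3)      # simulated lighting change
        total += toy_detector_score(apply_patch(image, patch, top, left, brightness))
    return total / n_samples


rng = random.Random(0)
image = [[0.8] * 8 for _ in range(8)]           # 8x8 grayscale toy image
dark_patch = [[0.0] * 3 for _ in range(3)]
bright_patch = [[0.9] * 3 for _ in range(3)]

print(expected_score(image, dark_patch, 200, rng))
print(expected_score(image, bright_patch, 200, rng))
```

A pattern that only lowers the score at one fixed position and brightness would be of little use in the physical world. Averaging over random conditions rewards patterns that work consistently.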

Study Results

The study results show that Adversarial Attacks are indeed capable of significantly disrupting modern object detection systems. In particular, the YOLOv2 model showed high vulnerability to the developed attack patterns. By applying the patterns, the researchers could drastically reduce the detection rate of people, even though the people remained plainly visible to a human observer.

Effectiveness in the Digital World

In digital simulations, the attacks could reduce Average Precision (AP), a measure of object detection accuracy, by up to 70%. This illustrates how effective these attacks can be when conditions are controlled.
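For readers unfamiliar with the metric, the following sketch computes a simple, non-interpolated Average Precision from a ranked list of detections. The detection lists are invented purely for illustration and are not data from the study; benchmark suites typically use interpolated variants of AP.

```python
def average_precision(detections, n_ground_truth):
    """Non-interpolated Average Precision.

    detections: list of (confidence, is_true_positive) tuples.
    n_ground_truth: number of objects that should have been found.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    precision_sum = 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
            # Precision at the rank where this correct detection appears.
            precision_sum += true_positives / rank
    return precision_sum / n_ground_truth if n_ground_truth else 0.0


# Invented example: four people in the scene.
clean = [(0.95, True), (0.90, True), (0.80, False), (0.70, True), (0.60, True)]
attacked = [(0.80, False), (0.40, True)]  # most people are no longer detected

ap_clean = average_precision(clean, n_ground_truth=4)
ap_attacked = average_precision(attacked, n_ground_truth=4)

print(f"AP clean:    {ap_clean:.4f}")
print(f"AP attacked: {ap_attacked:.4f}")
print(f"Absolute drop: {ap_clean - ap_attacked:.4f}")
print(f"Relative drop: {(ap_clean - ap_attacked) / ap_clean:.1%}")
```

Missed objects hurt AP twice: they contribute nothing to the sum, while the denominator still counts every object that should have been found.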

Physical Attacks

In real environments, such as through printed posters or patterns applied to clothing, the study authors could also significantly impair detection systems. While effectiveness in the physical world was lower than in digital simulation, the results still represented a serious threat, especially in security-critical areas such as access control or autonomous driving.

Risks for Companies

The findings of this study highlight the security vulnerabilities of AI systems that companies operate. Especially in industries where object detection and classification are vital, Adversarial Attacks can have severe consequences.

Manipulation of Security Cameras

Attackers could deliberately apply patterns to hide their presence from surveillance systems. This could pose a significant security risk in sensitive areas such as warehouses, production facilities, or office buildings.

Autonomous Driving

In the automotive industry, such attacks could impair the functioning of autonomous vehicles. Malfunctions in object detection could cause vehicles to fail to recognize obstacles or misidentify them, potentially leading to accidents.

Access Control Systems

Companies relying on AI-powered access control systems could have their security measures bypassed through Adversarial Attacks. This poses a significant risk, especially for companies with valuable or sensitive data.

Measures to Protect Against Adversarial Attacks

Given the growing threat of Adversarial Attacks, it is essential for companies to take proactive measures to protect their AI systems.

Implementing Explainable AI (XAI)

Explainable AI (XAI) makes the decisions of AI systems easier to understand and trace. This transparency in decision-making allows anomalies or suspicious patterns to be detected and investigated more quickly.

Regular Security Audits

AI models should be continuously tested for vulnerabilities and updated accordingly. This includes both software updates and verification of training datasets to ensure no manipulated data has been injected.
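One metric such an audit can track is robust accuracy: the share of test samples that stay correctly classified under a bounded perturbation. The sketch below uses a toy linear model with made-up weights and samples, for which the worst case can be computed exactly. For real, non-linear detectors no such closed form exists, and audits usually have to run actual attacks instead.

```python
# Hypothetical toy model: a score above 0 means class 1, otherwise class 0.
WEIGHTS = [0.9, -0.4, 0.3, -0.8]
BIAS = -0.05


def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def is_robust(x, label, epsilon):
    """True if the sample is classified correctly and no perturbation that
    changes each feature by at most epsilon can flip the prediction.

    For a linear model the worst-case score shift is epsilon * sum(|w|).
    """
    margin = score(x) if label == 1 else -score(x)
    return margin > epsilon * sum(abs(w) for w in WEIGHTS)


def robust_accuracy(samples, epsilon):
    """Fraction of (input, label) samples that are robust at the given epsilon."""
    return sum(is_robust(x, y, epsilon) for x, y in samples) / len(samples)


# Invented audit set of (features, true label) pairs.
samples = [
    ([0.5, 0.4, 0.5, 0.4], 1),
    ([1.0, 0.0, 1.0, 0.0], 1),
    ([0.0, 1.0, 0.0, 1.0], 0),
    ([0.2, 0.5, 0.1, 0.3], 0),
]

for eps in (0.0, 0.1, 0.2):
    print(eps, robust_accuracy(samples, eps))
```

Tracking this number across model versions shows whether an update made the system more or less fragile, which plain accuracy on clean data cannot reveal.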

Using More Robust Algorithms

Developing and implementing more robust algorithms is crucial to making AI systems more resistant to Adversarial Attacks. This can be achieved, for example, through adversarial training, in which manipulated examples are included during training, or through models specifically designed to detect and defend against such attacks.
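One widely used technique in this area is adversarial training, where perturbed copies of the training data are added with their correct labels so the model learns to resist them. The sketch below shows only the data augmentation step, using a hypothetical toy linear model to choose the perturbation direction. The training loop itself is omitted, and all names and values are illustrative assumptions.

```python
# Hypothetical toy model weights, used only to pick the perturbation direction.
WEIGHTS = [0.9, -0.4, 0.3, -0.8]


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_copy(x, label, epsilon):
    """Push x toward the wrong side of the decision boundary.

    Each feature changes by at most epsilon: the score is lowered for
    positive samples (label 1) and raised for negative samples (label 0).
    """
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]


def augment_with_adversarial(samples, epsilon):
    """Return the original samples plus one perturbed copy of each.

    The perturbed copy keeps the true label, which is what teaches the
    model to ignore the manipulation.
    """
    augmented = list(samples)
    for x, label in samples:
        augmented.append((adversarial_copy(x, label, epsilon), label))
    return augmented


training_set = [
    ([0.5, 0.4, 0.5, 0.4], 1),
    ([0.2, 0.5, 0.1, 0.3], 0),
]
augmented_set = augment_with_adversarial(training_set, epsilon=0.1)
print(len(training_set), "->", len(augmented_set), "samples")
```

In practice the perturbed copies are regenerated during training against the current model, since a fixed set of old perturbations quickly stops being challenging.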

Employee Training and Awareness

Employees should be regularly trained to understand the importance of AI security and recognize potential threats early. An informed workforce can significantly contribute to closing security gaps. At innFactory AI Consulting, we regularly offer AI Officer training and support you from the start with your AI challenges.

Best Practices for Protection Against Adversarial Attacks

In addition to the measures mentioned above, there are several best practices that companies should implement to protect their AI systems:

- Model Diversification: Using multiple AI models in a system can reduce the likelihood that a single Adversarial Attack compromises the entire system.

- Data Validation: Thorough verification of input data can help detect and block manipulated data.

- Anomaly Detection: Anomaly detection systems can identify unusual patterns that indicate a potential attack.

- Sensitive Data Encryption: Encrypting data transmitted to AI systems protects against unauthorized access and manipulation.

- Regular Audits: Regular audits allow companies to identify and fix vulnerabilities before they can be exploited. For security-critical systems, you should consider ISO/IEC 42001 (AI management systems) in addition to ISO/IEC 27001 (information security management).
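Model diversification and anomaly detection can work together: if several independent models disagree about the same input, that disagreement is itself a warning sign. The following is a minimal sketch of this idea. The function name, the labels, and the agreement threshold are illustrative assumptions, not part of any specific product.

```python
from collections import Counter


def ensemble_decision(predictions, min_agreement=1.0):
    """Combine labels from several independent models for the same input.

    Returns (majority_label, agreement_ratio, needs_review). The input is
    flagged for review when fewer than min_agreement of the models agree
    with the majority label.
    """
    counts = Counter(predictions)
    label, votes = counts.most_common(1)[0]
    agreement = votes / len(predictions)
    return label, agreement, agreement < min_agreement


# All three hypothetical models agree: nothing to review.
print(ensemble_decision(["person", "person", "person"]))

# One model no longer sees the person: the frame is flagged for review.
print(ensemble_decision(["person", "no_person", "person"]))
```

This only helps if the models are genuinely different, for example in architecture or training data. As the study's transferability goal suggests, a pattern can sometimes fool several models at once, so agreement is evidence of a clean input, not proof.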

Conclusion

The study “Making an Invisibility Cloak” underscores the dangers of Adversarial Attacks on modern AI systems. The results show that even established object detection models like YOLO can be vulnerable to targeted attacks. These findings highlight the urgency of investing in the security and robustness of AI systems.

For managers and executives of medium-sized companies, this means that implementing AI solutions is not just a technical but also a strategic concern. Explainable AI, continuous security audits, and robust algorithms are essential to ensuring the integrity and reliability of AI applications.

Although the studied YOLOv2 model dates back to 2016, the demonstration of its vulnerability remains an important reminder of the need for ongoing improvements in AI security. Companies must act proactively to protect their systems from potential threats while fully leveraging the benefits of AI technologies.

Contact us to learn more about how we can help you make your AI strategy secure and future-proof.